How do LLMs remember?

By now we all know that LLMs make stuff up when they are stuck. We turned to expert Shahed Saleh at CoLab to tell us exactly how CoLab solves for that.

The Expert

Shahed Saleh

Senior AI Product Manager

What she does all day: "I strategize with my amazing R&D team on how our customers can use AI to support their product review process."

Real-world roles: Designing social and industrial robotics systems, DarwinAI

From: https://newsletter.maartengrootendorst.com/p/a-visual-guide-to-llm-agents

What’s the issue:

It used to be that computers only did what they were told. AI changes that. There's a famous line in David Cronenberg’s The Fly, a great movie in part about technology: “Computers are dumb. They only know what you tell them.”

Today, that isn’t true, because they don’t even remember what you tell them.

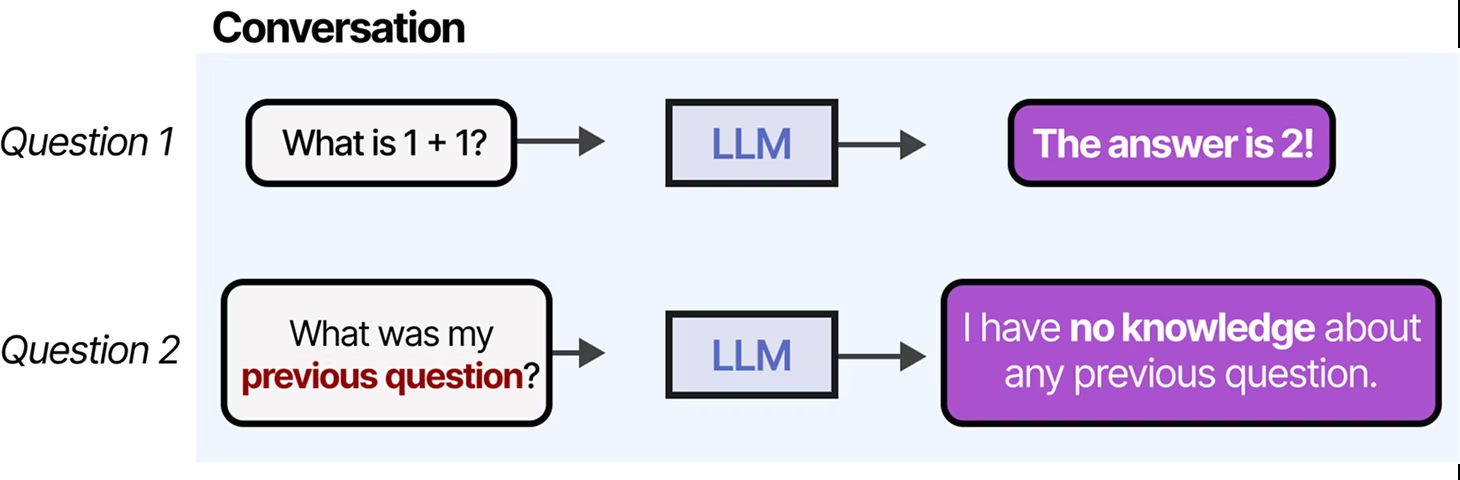

At least LLMs don’t. Anyone who has ever worked with ChatGPT knows that LLMs don’t accumulate knowledge as they work. Instead, their decision-making power is limited to the window they are working in. And if you keep going and push too hard, they start making stuff up.

Why does this happen? Let’s ask AI itself, in this case Gemini first, then ChatGPT:

Gemini: LLMs can't remember because their memory is based on a limited "context window" where the previous conversation is tokenized and processed together, and once this limit is exceeded, older information is dropped. Unlike humans, they lack true memory storage, and their apparent recall is achieved by feeding the conversation history back into the prompt in each turn. This short-term memory limitation can cause models to "forget" details, leading to a gradual distortion or "drift" over long conversations.

ChatGPT (a more literary attempt to explain): It’s like talking to someone who can only remember what’s on a whiteboard in front of them: once it’s erased, the memory is lost (that sounds more like Christopher Nolan’s Memento than The Fly).
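The mechanism Gemini describes, where the conversation history is fed back in on every turn and the oldest messages fall out once the limit is reached, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `count_tokens` is a crude word counter standing in for a real tokenizer, `build_prompt` and the 20-token limit are hypothetical, and the sample conversation is made up.

```python
def count_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word.
    # Real tokenizers split text differently.
    return len(text.split())


def build_prompt(history: list[str], new_message: str, max_tokens: int) -> list[str]:
    """Keep the new message plus as many recent messages as fit in the window."""
    window = [new_message]
    used = count_tokens(new_message)
    # Walk backwards from the most recent message to the oldest.
    for message in reversed(history):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        window.insert(0, message)
        used += cost
    return window


# Made-up conversation for illustration.
history = [
    "User: The bracket must be 6061 aluminum.",
    "Assistant: Noted, 6061 aluminum for the bracket.",
    "User: Wall thickness is 3 mm minimum.",
    "Assistant: Understood, 3 mm minimum wall.",
]
prompt = build_prompt(history, "User: What material is the bracket?", max_tokens=20)
for line in prompt:
    print(line)
```

With this small window, the two oldest messages no longer fit, so the model is asked about the bracket material without ever seeing the answer. That is the point at which an LLM starts guessing.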

But the point is, it’s true. LLMs don’t remember. They can’t. At the very simplest level, they aren’t built that way. They process words or data, compute probabilities and generate a response. They execute each request the same way because that’s how they’ve been told to work.

And yet, hmmmm, here comes a company called CoLab Software, saying its AutoReview function can remember. What do we make of that?

CoLab makes software for manufacturers and mechanical engineers that includes a Design Engagement System, which works with your existing CAD and within your current PLM during product development. The additional AutoReview component makes multiple promises. But some of those promises sound an awful lot like CoLab is saying it can remember:

With CoLab, you upload a model or drawing and AutoReview automatically surfaces lessons learned from previous reviews. Your team can quickly apply past decisions to new designs without starting every review from scratch.

Or

With CoLab, senior engineers leave feedback once, so it’s stored forever. Then, AutoReview recalls that feedback when similar parts show up again. So, your team sees why the design is the way it is and applies the same thinking to new iterations.

Okay, so it’s “recalls” instead of “remembers.” But the point is clear: CoLab says that it can help use AI to remember – sorry, recall – your feedback as a design engineer and then apply it.

Well, wait a minute, you must be thinking. I thought we just established that’s not possible. LLMs don’t remember. So this must be marketing hype run amok.

Let’s find out. For answers we turn to Shahed Saleh, Senior AI Product Manager at CoLab, and an engineer who has designed social robots, prosthetics, and AI-driven defect detection systems for manufacturing lines.

And you know what: It turns out LLMs can remember. They just need a little help.

How do LLMs seem like they know so much if they can’t remember the way humans can?

Shahed: LLMs are really good at ingesting and synthesising large amounts of data. When given access to a bunch of context, they can present patterns and trends using natural language faster than you and I could.

When users chat with just an LLM and not AutoReview, the answers they get back are limited to what the LLM learned in training and the context given to it in that specific chat. And there's no clear way for the LLM to carry that exchange into future chats. It's like a point-in-time knowledge exchange between the user and the LLM.

So let's say CoLab has 10 files with company A and we’re working with our AutoReview AI function. Now we add file 11. Does the AI look at files 1 to 10 before it looks at 11? Or does it just start with 11?

Shahed: Right now AutoReview looks at each file individually. That's because we're doing all of the pre-processing work to break down the information in each file before feeding it to the LLM. That way the LLM has the engineering design information in a format where it can go through and do the review more effectively.

So LLMs can only work in that “moment of time” in the conversation. So is CoLab overstating its position? Can its AutoReview function remember?

Shahed: The difference with what we're doing is AutoReview uses LLMs to generate feedback. The feedback items themselves are still trackable in the Design Engagement System (DES). They're objects that exist within CoLab and can be retained as information. Users can give reinforcement feedback in the form of a thumbs up or thumbs down to indicate how correct or valuable generated feedback is.

That gives us the basis for a system that can learn. We have feedback objects that are stored with user reinforcement. We will eventually do the work to take that user reinforcement and summarize it for future AutoReview runs that work like this: Here's what happened in the past on similar files. Here's what you did well (thumbs up) and here's what you didn't do well (thumbs down). Then we ask the LLM to take that into consideration when you go and try to review other files from now on.

We're taking its response, creating an object out of it that is AutoReview feedback, and then storing that and using that to make the system smarter long term.
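The loop Shahed describes (store each piece of generated feedback as an object, attach thumbs up or thumbs down, then summarize that history into the prompt for the next review) might look roughly like the Python sketch below. It is not CoLab's implementation, and Shahed describes the summarizing step as future work. Every name here (`FeedbackItem`, `FeedbackStore`, `build_review_prompt`) is hypothetical, the sample comments are made up, and matching on an exact `part_type` string is a simplifying assumption standing in for however "similar files" are actually identified.

```python
from dataclasses import dataclass


@dataclass
class FeedbackItem:
    """One piece of generated review feedback, kept outside the LLM."""
    part_type: str  # assumption: a simple label standing in for "similar files"
    comment: str
    score: int = 0  # net reinforcement: +1 per thumbs up, -1 per thumbs down


class FeedbackStore:
    def __init__(self) -> None:
        self.items: list[FeedbackItem] = []

    def add(self, part_type: str, comment: str) -> FeedbackItem:
        item = FeedbackItem(part_type, comment)
        self.items.append(item)
        return item

    def vote(self, item: FeedbackItem, thumbs_up: bool) -> None:
        item.score += 1 if thumbs_up else -1

    def summarize(self, part_type: str) -> str:
        """Turn stored, voted-on feedback into text an LLM can read."""
        related = [i for i in self.items if i.part_type == part_type]
        lines = ["Here's what happened in the past on similar files."]
        lines += [f"Did well: {i.comment}" for i in related if i.score > 0]
        lines += [f"Did not do well: {i.comment}" for i in related if i.score < 0]
        return "\n".join(lines)


def build_review_prompt(store: FeedbackStore, part_type: str, file_text: str) -> str:
    # The "memory" lives in the store; the LLM only ever sees this prompt.
    return f"{store.summarize(part_type)}\n\nNow review this file:\n{file_text}"


# Made-up feedback for illustration.
store = FeedbackStore()
fillet = store.add("bracket", "Flag missing fillet radius on inside corners.")
material = store.add("bracket", "Suggest changing the material.")
store.add("housing", "Check draft angle.")
store.vote(fillet, thumbs_up=True)
store.vote(material, thumbs_up=False)

prompt = build_review_prompt(store, "bracket", "bracket_rev2 drawing text")
print(prompt)
```

The LLM itself stays stateless in this sketch. What persists is the store, and each new review starts with a prompt that carries the relevant history back in.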

There you have it. The two systems work in tandem to give the LLM a way to learn and remember. At the simplest level, CoLab continually stores information and feeds it back to the LLM to give it enough context to make good decisions. In effect, CoLab has added a brain capable of retaining information for an LLM.

CoLab works in tandem with the AI to ensure that it can understand the full content of the design drawing and incorporate feedback.

So it doesn’t “remember” the same way you and I remember. It remembers in this sense: CoLab does the remembering, CoLab turns what it has stored into good instructions, and then the AI does the work.